Talk:IEEE 754/Archive 1

| This is an archive of past discussions about IEEE 754. Do not edit the contents of this page. If you wish to start a new discussion or revive an old one, please do so on the current talk page. |

Article might benefit from outside views of the new standard

The present article is carefully-written and informative, but it appears to be the view 'as seen by members of the committee.' (It reads like an insider view, though there is nothing improper about that). Has the trade press commented on these activities? Are any companies planning to implement new chips based on it? Has there been any published criticism of the new standard? This kind of thing would be useful to add, if properly sourced. EdJohnston (talk) 16:38, 11 September 2008 (UTC)

- Absolutely -- I think the 754r article has been a 'holding pattern' for the new standard revision as it evolved. So that becomes a historical record of that revision. Soon (as soon as the new standard is actually available -- it was published on 29.08.2008) it will be time to put together a new 'IEEE 754' article that reflects the new standard. mfc (talk) 19:43, 12 September 2008 (UTC)

- An additional comment on the above -- the revision process was open: anyone could join the mailing list or attend the meetings (no membership or fees required), so there could not really be such a thing as an 'insider view'. More than 90 people participated in one or more meetings (some had 40+ attendees), and over 100 voted in the ballots. And yes, there are three separate hardware implementations, to date. mfc (talk) 16:55, 28 September 2008 (UTC)

- Now the standard is finally available from the IEEE, it's probably time to start a new description of the standard for Wikipedia -- perhaps a working document first? mfc (talk) 16:55, 28 September 2008 (UTC)

- By an 'insider view', I only mean 'the view by the people who were creating the standard.' If a standard has impact in the real world, there should be people who were not part of the committee who have things to say about it. I know that the original IEEE FP standard had a lot of impact, since it greatly reduced the number of incompatible floating-point implementations that engineers had to program for.

- I agree. The current IEEE 754-2008 looks like just a rename/copy of the old IEEE 754r article, which was only about the revision process and a summary of changes. That is probably worth having (as a separate article?), but it's really not what should be in IEEE 754-2008 mfc (talk) 08:57, 29 September 2008 (UTC)

- User:mfc noted that there are three separate hardware implementations. Shouldn't that be mentioned in the article? Are these implementations documented anywhere? EdJohnston (talk) 17:49, 28 September 2008 (UTC)

- Also agree, and again not really much to do with the 'revision' article. For the record, the three hardware implementations are in POWER6 (full decimal floating-point unit), IBM System z9 (assists+millicode), and IBM System z10 (full decimal floating-point unit). For more details follow links from [1]. mfc (talk) 08:57, 29 September 2008 (UTC)

Still not an 'outsider view', but to kick off a new write-up of the standard I have put together a replacement text. This is mostly new text, but with some from the old IEEE 754-1985 article. The existing text here I will move to IEEE 754 revision before replacing with the new text. All comments and (correct) edits welcome! There is plenty that can be improved. mfc (talk) 16:11, 3 October 2008 (UTC)

IEEE 854 redirects to here, but no mention of it is made within the article. Should a note be added to indicate that it has now merged with this standard? --Wm243 (talk) 13:52, 25 March 2009 (UTC)

- Good point – have so added. mfc (talk) 16:13, 27 March 2009 (UTC)

The IEEE 754 revision article mentions a half precision format, but no mention is made of it here. Was this dropped from the specification? --Wm243 (talk) 12:43, 17 April 2009 (UTC)

- It ended up being just one of the many interchange formats for binary, and is not a basic format. It is mentioned under 'Interchange formats'. mfc (talk) 14:59, 17 April 2009 (UTC)

VB.Net Conversion Code: IEEE754 to Hex to IEEE754

Hexadecimal to IEEE 754 Conversion...

Private Function ConvertHexToIEEE754(ByVal hexValue As String) As Single
    Try
        ' Expect exactly 8 hex digits, most significant byte first (e.g. "3F800000").
        If hexValue.Length <> 8 Then Throw New ArgumentException("Expected 8 hex digits.")
        Dim bArray(3) As Byte
        For i As Integer = 0 To 3
            bArray(i) = Byte.Parse(hexValue.Substring(i * 2, 2), Globalization.NumberStyles.HexNumber)
        Next
        ' BitConverter uses the host byte order, so reverse only on little-endian machines.
        If BitConverter.IsLittleEndian Then Array.Reverse(bArray)
        Return BitConverter.ToSingle(bArray, 0)
    Catch ex As Exception
        Throw New FormatException("The supplied hex value is either empty or in an incorrect format. Use the following format: 00000000", ex)
    End Try
End Function

IEEE 754 to Hexadecimal Conversion...

Private Function ConvertIEEE754ToHex(ByVal SngValue As Single) As String
    Dim tmpBytes() As Byte = BitConverter.GetBytes(SngValue)
    ' Emit the most significant byte first, whatever the host byte order.
    If BitConverter.IsLittleEndian Then Array.Reverse(tmpBytes)
    Dim tmpHex As String = ""
    For Each b As Byte In tmpBytes
        tmpHex &= b.ToString("X2") ' always two hex digits, e.g. 0F
    Next
    Return tmpHex
End Function —Preceding unsigned comment added by 59.160.73.114 (talk) 06:48, 11 November 2009 (UTC)

For more information please visit URL: http://weblogs.asp.net/craigg/archive/2006/09/25/Hexadecimal-to-Floating-Point-_2800_IEEE-754_2900_.aspx

- This does not strike me as appropriate material for the article, and this page is for discussions about improving the article. Dmcq (talk) 13:35, 11 November 2009 (UTC)
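For readers following the thread, the same round trip can be sketched in Python with the standard `struct` module. This is only an illustrative sketch and not part of the original post: the function names `hex_to_binary32` and `binary32_to_hex` are made up here, and the hex text is assumed to be written most significant byte first.

```python
import struct

def hex_to_binary32(hex_text: str) -> float:
    """Interpret 8 hex digits (most significant byte first) as a binary32 value."""
    raw = bytes.fromhex(hex_text)          # raises ValueError on non-hex input
    if len(raw) != 4:
        raise ValueError("expected exactly 8 hex digits, e.g. 3F800000")
    return struct.unpack(">f", raw)[0]     # ">f" = big-endian binary32

def binary32_to_hex(value: float) -> str:
    """Round value to binary32 and return its 8-digit uppercase hex encoding."""
    return struct.pack(">f", value).hex().upper()

if __name__ == "__main__":
    print(hex_to_binary32("3F800000"))  # 1.0
    print(binary32_to_hex(-2.0))        # C0000000
```

Spelling out the byte order in the format string avoids the host-dependent byte reversal that the VB.Net version has to handle by hand.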

Misleading table?

The digits column of the table in the basic formats section might be a little misleading. I wasn't sure if it meant binary or decimal until I looked at the Wikipedia page on doubles. I think the column should be relabeled "binary digits". —Preceding unsigned comment added by 24.41.57.147 (talk) 04:58, 10 August 2010 (UTC)

I think a second column should also be added that lists approximate numbers of decimal digits of precision. —Preceding unsigned comment added by 24.41.57.147 (talk) 05:13, 10 August 2010 (UTC)

- It wouldn't be the number of binary digits for the decimal formats. That's why the previous column gives the base. Didn't you wonder too about the +1 which is explained in the line just above the table? The standard doesn't give approximate decimal digits. The figures that could possibly be given are the various ones in IEEE 754-2008#Character representation. I'll stick a reference to that section under the table. Dmcq (talk) 09:14, 10 August 2010 (UTC)

- I just had a look at that section and it might not be best for the purpose. I think it would be allowable for me to stick the value of log10(2^precision) in the table. This would give about 7.2 for binary32, whereas only 6 digits of a decimal number might be recovered going to it and back again, and yet 9 digits are needed in decimal to ensure the binary value is got back again. Dmcq (talk) 10:17, 10 August 2010 (UTC)
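The three figures mentioned for binary32 (about 7.2, 6 and 9) can be reproduced with a short Python sketch. The function name `decimal_digit_figures` is hypothetical, and the two rounding formulas are the commonly quoted ones for round-trip digit counts rather than anything taken from this discussion; `p` is the precision in bits including the hidden bit.

```python
import math

def decimal_digit_figures(p: int) -> tuple[float, int, int]:
    """For a binary format with p significand bits, return
    (log10(2**p),
     decimal digits that survive decimal -> binary -> decimal,
     decimal digits needed for binary -> decimal -> binary)."""
    log10_of_2 = math.log10(2)
    return (p * log10_of_2,
            math.floor((p - 1) * log10_of_2),
            math.ceil(1 + p * log10_of_2))

if __name__ == "__main__":
    for name, p in (("binary32", 24), ("binary64", 53)):
        approx, survive, needed = decimal_digit_figures(p)
        print(f"{name}: {approx:.1f} {survive} {needed}")
    # binary32: 7.2 6 9
    # binary64: 16.0 15 17
```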

Distinguishing zeroes

It might be worth mentioning that +0 and -0 can be distinguished, in a language that does not offer direct access to the bit pattern but allows infinity, by comparing their reciprocals. If X is a number that is not NaN, one can always determine the sign of X by testing (X + 1/X). JavaScript is such a language, and in it parseInt of a signed zero String returns a signed zero Number. 82.163.24.100 (talk) 22:27, 26 October 2010 (UTC)

- And if X is a NaN? Don't encourage contorted programming practice / workarounds. If the sign needs to be determined, then use a function such as copysign. If the implementation doesn't have the facility, then it should be added to the implementation. Glrx (talk) 18:04, 27 October 2010 (UTC)
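As a small illustration of the copysign approach suggested above, here is a Python sketch. The helper name `sign_of` is made up for this example. Note that the reciprocal trick does not carry over to Python, where dividing a float by zero raises `ZeroDivisionError` instead of returning an infinity.

```python
import math

def sign_of(x: float) -> float:
    """Return -1.0 or 1.0 according to the sign bit of x (works for -0.0 too)."""
    return math.copysign(1.0, x)

if __name__ == "__main__":
    print(sign_of(0.0), sign_of(-0.0))  # 1.0 -1.0
    print(0.0 == -0.0)                  # True: comparison cannot tell them apart
```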

Citations etc.

Ran across this article mentioned in the Wikipedia:Articles for deletion/IEEE machine. Four articles on this subject is probably too many, but not sure if we want one, two, or three. There is much material here, but very few inline citations, so seems mainly written from personal experience and thus hard to verify. IEEE floating point which might be the common name, redirects here, but it is the IEEE 754-1985 that has the diagrams and background that a general reader might be more interested in, so maybe we should redirect it back there, or else consider a merge. IEEE 754 revision seems mostly personal narrative, with only two inline links instead of citations. It looks like it was split off of this one in October 2008 or so. The articles are otherwise well-written, so not sure if a merge is worth it. It just now requires reading all three to make sense. From the first sentence in the lead, a reader would expect this to be an article on the standard in general, not one revision of it. There could be more in the future too. W Nowicki (talk) 18:15, 21 September 2011 (UTC)

I would also add that the only two inline citations are in the lead, and mention an alphabet soup that would be fairly meaningless to most readers. My guess is that the same format was approved by another standards body, but if so, that should be stated explicitly in the body, with a summary in the lead only. ISO/IEC/IEEE 60559:2011 ... JTC1/SC 25 ... ISO/IEEE PSDO is not English. Maybe someone should propose a Wikipedia written entirely in acronyms and standards numbers. :-) W Nowicki (talk) 18:23, 21 September 2011 (UTC)

- My personal choice would be a single article titled with the common name, IEEE floating point, with a redirect for the standards number as being more accessible to most readers, but that could be just me. (Do we have a guideline that expresses a preference either way for common names versus standards numbers?) I also struggled some with the IEEE 754 revision article, wondering just what additional value it offered. Msnicki (talk) 18:27, 21 September 2011 (UTC)

- In some sense only one citation is needed -- to the actual Standard; all assertions in the Wikipedia article can be checked against that.

- On the articles IEEE 754-2008, IEEE 754 revision, and IEEE 754-1985 -- it might make sense to merge the first and third, but a lot of new material would need to be added to get the 2008 additions covered as well as the 'old' basics from 1985. This would make it very big and long -- perhaps a different structure altogether is needed: the (current) '2008' article as top-level, pointing to "IEEE 754 binary formats" and "IEEE 754 decimal formats" which detail the bits and bytes.

- I think the revision article is definitely best kept separate as it refers to the history and process rather than the content. Important stuff, because it shows that due diligence was done over the 7 years by dozens of people, but it is probably not what most readers will be looking for. mfc (talk) 13:08, 22 September 2011 (UTC)

- I agree that just merging the 2008 and 1985 articles would make a mess of things. The 2008 standard has a lot of additions and changes in it and the 1985 one is what most machines currently implement. You'd get an article with lots of ifs in it where a lot wasn't applicable to what's mainly out there. This article already refers to separate articles for more down to earth aspects like binary or decimal formats but it could do with a lot more linking to describe other things. Dmcq (talk) 13:54, 22 September 2011 (UTC)

Well if the 1985 standard is what is most common, then perhaps IEEE floating point should at least point there and not here? Given the much sadder state of some other standards articles, it probably makes sense to just work on some of the sourcing for now and avoid the major work of merges, until when and if there is a clear way to improve things. I never formally proposed a merge because it was not clear which way to go. What I am trying to avoid is a deletion fight when someone comes along and cites the rule that any unsourced material may be removed at any time. I do find the narrative of the group history interesting to keep, as long as it does not overlap this article too much. Maybe just rewrite the lead of this one a bit to clarify what we are saying here. Wikipedia articles should talk about the standard (e.g. cite estimates for how widely they are used) but need not be complete enough to allow someone to implement from the article - that is what the standards documents themselves are for, or other books and articles on the subject. W Nowicki (talk) 18:00, 24 September 2011 (UTC)

Examples

This article needs examples! — Preceding unsigned comment added by 82.139.196.68 (talk) 12:56, 19 February 2012 (UTC)

- Of what? The various formats are described in detail in other articles. Dmcq (talk) 13:04, 19 February 2012 (UTC)

Link to an Excel spreadsheet showing how to calculate to/from IEEE 754

This link was reverted with the explanation "Not a place to place tools. This site is for encyclopaedic information." I agree that it is not a place for tools, although there is already a link for an on-line calculator. Reference www.simplymodbus.ca/ieeefloats.xls The spreadsheet was not written to be a calculation tool. There are many more efficient calculators available for this purpose. It was written to show how the calculation is done. I use this spreadsheet to show others the details of the actual calculation. The first tab (hex to float) shows how two 16-bit integers (words) are broken down into 4 bytes and then 32 bits, and then how those bits are used to determine the sign, exponent and mantissa that are used in an equation to calculate the floating point number. The second tab (float to hex) does the opposite. It shows how a floating point number is used to determine the sign, exponent and mantissa, and how these are converted into 32 bits, then 4 bytes and finally two words. I believe it is valuable for educational purposes and should be included. Batman2000 (talk) 14:49, 18 March 2012 (UTC)

- I agree with the deletion. See WP:ELNO #8: WP doesn't want to link to material that requires external applications such as Excel. That other links exist is not an argument to include another link. Although I agree the current article does not do a good job of explaining or showing the bit encoding (IEEE 754-1985 makes an attempt), I don't see the Excel sheets being so helpful that I'd override #8. For our purposes, a few specific examples with text and a static diagram can be better than a program that can handle arbitrary values. Although the spreadsheet may help you to explain the encoding to others, the layout is busy, takes some effort to interpret, and begs some familiarity with Excel. The spreadsheet took a lot of commendable effort, but I don't think it is an appropriate external link for this article. Glrx (talk) 16:29, 18 March 2012 (UTC)

- If you click on any of the links for a particular format eg binary32 it takes you to a page with everything you'd want to know about it. Dmcq (talk) 23:01, 18 March 2012 (UTC)
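The hex-to-float calculation described in this thread (two 16-bit words, split into sign, exponent and mantissa, then combined in an equation) can be sketched in Python as follows. This is not a transcription of the spreadsheet: the function name `words_to_binary32` and the high-word-first ordering are assumptions made for the example.

```python
def words_to_binary32(high_word: int, low_word: int) -> float:
    """Decode two 16-bit words (high word first) as a binary32 number, by hand."""
    bits = (high_word << 16) | low_word      # the 32-bit pattern
    sign = -1.0 if bits >> 31 else 1.0       # bit 31
    exponent = (bits >> 23) & 0xFF           # bits 30..23, biased by 127
    fraction = bits & 0x7FFFFF               # bits 22..0
    if exponent == 0xFF:
        raise ValueError("infinity or NaN; not covered by this sketch")
    if exponent == 0:                        # zero or subnormal: no hidden 1
        return sign * fraction * 2.0 ** -149
    # Normal number: (-1)^s * 1.f * 2^(e - 127)
    return sign * (1 + fraction / 2**23) * 2.0 ** (exponent - 127)

if __name__ == "__main__":
    print(words_to_binary32(0xC2ED, 0x4000))  # -118.625
```

Here 0xC2ED4000 splits into sign 1, biased exponent 133 and fraction 0x6D4000, giving -(1.853515625) * 2^6 = -118.625.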

Details please

- Question: In IEEE 754, which bits/bytes are used as the significand and the exponent? What code is used for infinity, and NaN? This detail should be included in the article, in case anyone's curious. 68.173.113.106 (talk) 21:06, 28 January 2012 (UTC)

- Click on the blue link for the particular format you're interested in to get details about it. Dmcq (talk) 23:09, 28 January 2012 (UTC)

- I agree that the details and examples in IEEE_754-1985 are very helpful. Should be included here or in a merged page. — Preceding unsigned comment added by 90.184.27.253 (talk) 13:23, 20 April 2012 (UTC)

- You can click on the links. You don't need everything in a single article on the web when you have hyperlinks. The standard was much smaller in 1985, the decimal format needs a great deal of explaining and sticking all the stuff into this article as well as having separate articles is just unnecessary. Dmcq (talk) 14:14, 20 April 2012 (UTC)
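As a partial answer to the question about which codes stand for infinity and NaN, here is a hedged Python sketch for binary32 only. The function name `classify_binary32` is made up, and the quiet/signaling split follows the encoding recommended by the 2008 revision (a "should", so some older hardware differs).

```python
def classify_binary32(bits: int) -> str:
    """Name the kind of value a 32-bit pattern encodes in binary32."""
    exponent = (bits >> 23) & 0xFF   # 8-bit biased exponent
    fraction = bits & 0x7FFFFF       # 23-bit trailing significand
    if exponent == 0xFF:             # all-ones exponent: infinity or NaN
        if fraction == 0:
            return "infinity"
        # Assumption: top fraction bit set means quiet (2008 recommendation).
        return "quiet NaN" if fraction >> 22 else "signaling NaN"
    if exponent == 0:                # all-zeros exponent: zero or subnormal
        return "zero" if fraction == 0 else "subnormal"
    return "normal"

if __name__ == "__main__":
    for pattern in (0x7F800000, 0x7FC00000, 0x80000000, 0x3F800000):
        print(f"{pattern:08X}", classify_binary32(pattern))
```

The sign bit (bit 31) is ignored by the classification, so 0x7F800000 and 0xFF800000 are both reported as infinity.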

Expression evaluation

Concerning the sentences I added to Expression evaluation that were partially reverted, I have two comments: (1) I would agree that the C99 FLT_EVAL_METHOD as standardized only allows one to read the current preferredWidth and there is currently no standardized method to set it, which would be the ideal, and on rereading the relevant section of the current IEEE754 standard, it does indeed indicate that such a setting should be settable at block level (which would be great to see if/when some language implements that). However, given that the C99 FLT_EVAL_METHOD is the only standardized method that I am aware of (except perhaps Fortran 2003) to at least read the preferredWidth setting, I think it would be useful to the reader to reference it in some way-- perhaps "For C99, the FLT_EVAL_METHOD allows the reading of the current preferredWidth setting, although setting of this is not currently standardized."

(2) The second sentence that was reverted covers a separate important issue-- that the compiler bugs that have plagued the usage of double extended format (with compilers randomly not performing conversions to the destination format) are explicitly forbidden by the IEEE 754 standard (and the C99 standard). Many programmers are still not clear on this and so I think it would be very useful to add this sentence back--

For named variables, the language standard is required to respect, and convert to, the specified format and "implementations shall never use an assigned-to variable’s wider [preferredWidth] precursor in place of the assigned-to variable’s stored value when evaluating subsequent expressions". This removes a major source of inconsistency between language implementations. Brianbjparker (talk) 21:31, 6 April 2012 (UTC)

- I'm not keen on sticking in random bits of bad implementations into a discussion of the standard. That can go in the C99 article I guess. I'll stick that bit back in about the assigned variable. Dmcq (talk) 22:10, 6 April 2012 (UTC)

- Fair enough. Note that I have modified the text to clarify that preferredWidth is defining the format for temporary subexpression result variables within expressions. I think this is important to clarify as the current text could have been read as the calculation was at a higher precision but still rounded to a smaller internal temporary variable. Brianbjparker (talk) 14:25, 7 April 2012 (UTC)

- That's interesting. My reading of that indicates the standard mandates double rounding if one sets the preferred width to extended and one adds two doubles and assigns to a double if following their recommendation. That doesn't sound at all right to me. I must check up on that, it sounds a bit wrong to me. Dmcq (talk) 22:45, 6 April 2012 (UTC)

- Maybe I'm misreading it, but § 10.2 is not about preferredWidth (which is § 10.3) even though preferredWidth can play a part. It seems to be a save-the-programmer-from-himself provision when the intermediate values are higher precision than a final destination. An intermediate result (say a product) is computed and rounded to an extended format in an FP register. To store the result in the explicit final double destination dfX, it must be rounded a second time to a double. The statement in 10.2 prohibits the language from using the extended double value in the FP register for anything else. If the language did use the wider register value instead of the rounded dfX, then it might subsequently compare the double dfX with the extended FP register and decide they are different (due to rounding).

- Right-- the fact that the previous IEEE 754 standard didn't specify that such explicit assignments and rounding must be honored by the compiler was the main cause of varying behaviour between compilers and on changing optimization levels within a given compiler. Many compilers as an "optimisation" would avoid doing the final rounding to the destination, or would do additional roundings during an expression if registers were spilled such that the results were random. Brianbjparker (talk) 14:25, 7 April 2012 (UTC)

- In some situations, it can be a good idea. In other situations, it throws away some precision.

- Glrx (talk) 00:39, 7 April 2012 (UTC)

- Note that preferredWidth is different from the C99 FLT_EVAL_METHOD: In C99, FLT_EVAL_METHOD is chosen by the C implementation (not by the user), while in IEEE 754-2008, preferredWidth is chosen by the user ("preferredWidth attributes are explicitly enabled by the user [...]"). Vincent Lefèvre (talk) 00:44, 7 April 2012 (UTC)

- Ok, yes. FLT_EVAL_METHOD is read-only and setting it is currently undefined in C (several compilers allow it to be set at a compilation unit level by compiler options). However, FLT_EVAL_METHOD 0 corresponds to preferredWidthNone (evaluate to type) and FLT_EVAL_METHOD 1 and 2 are the other two possible preferredWidthFormats (presumably if setting of preferredWidth per block is ever implemented in C compilers, which I would like to see, then FLT_EVAL_METHOD would give the default setting). Brianbjparker (talk) 14:25, 7 April 2012 (UTC)

- It seems my reading was correct, setting preferred width to extended may cause the add of two doubles producing a double to do double rounding. I guess one always has these corner cases however one sets standards. Dmcq (talk) 14:22, 20 April 2012 (UTC)

- Your statement seems confused. Setting preferredWidth to extended means adding two doubles results in an extended value (destination width is widest of operands and preferredWidth; extended is wider than double, so destination is extended; §10.3). If preferredWidth is set to none, then adding two doubles results in a double (destination width is that of the widest operand, which is a double). Setting pW to double would cause single + single to be rounded to double. Setting pW to single would still cause double + double to be rounded to double. Glrx (talk) 17:34, 21 April 2012 (UTC)

- What I'm talking about is

double a,b,c; ... a=b+c;

If preferred width is set to extended then that will very possibly involve double rounding. Dmcq (talk) 20:41, 21 April 2012 (UTC)

- Yes. Setting pW to extended can mean the result is rounded twice (aka double rounding). Adding b and c could be first rounded to extended precision, and then the explicit assignment to a would be a second rounding to double precision. forgot to sign: Glrx (talk) 21:09, 21 April 2012 (UTC)
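The double-rounding effect discussed here can be simulated with exact rational arithmetic in Python. This is a sketch under stated assumptions: the extended format is taken to have a 64-bit significand (as in x87), exponent range limits are ignored, the helper `round_to_precision` is hypothetical, and the operand values are chosen purely to trigger the effect.

```python
from fractions import Fraction

def round_to_precision(x: Fraction, p: int) -> Fraction:
    """Round a positive rational to p significant bits, ties to even."""
    if x <= 0:
        raise ValueError("this sketch handles positive values only")
    e = x.numerator.bit_length() - x.denominator.bit_length()
    if x < Fraction(2) ** e:          # make 2**e <= x < 2**(e + 1)
        e -= 1
    ulp = Fraction(2) ** (e - p + 1)  # spacing of p-bit values near x
    return round(x / ulp) * ulp       # round() on a Fraction rounds half to even

# b and c are both exactly representable as doubles (demo values, not from the thread).
b = Fraction(1)
c = Fraction(1, 2**53) + Fraction(1, 2**64)
exact_sum = b + c

direct = round_to_precision(exact_sum, 53)                         # one rounding, to double
twice = round_to_precision(round_to_precision(exact_sum, 64), 53)  # extended, then double

print(direct == 1 + Fraction(1, 2**52))  # True
print(twice == 1)                        # True: the two results differ by one ulp
```

The exact sum lies just above the midpoint between 1 and 1 + 2^-52, so a single rounding goes up. Rounding to 64 bits first lands exactly on that midpoint, and the second rounding then ties to even and goes down.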

Suggest merge

The two revision steps of the standard should be combined at IEEE 754 to avoid duplicate and inconsistent description of the common material. This would also make for a better presentation of the differences and significance of the differences between the two revisions. Individual technical standards are rarely notable and technical steps within those standards are even less so. --Wtshymanski (talk) 14:16, 6 March 2012 (UTC)

- Currently, IEEE 754 is a redirection to IEEE 754-2008. Is this essentially a request for a name change? Benjaminoakes (talk) 14:10, 4 April 2012 (UTC)

- A little more than that is needed, I think. I would imagine a combined article would first describe the motives and development of 754, and give the common elements of the two standards. Then the specific limitations of the 1985 release that were addressed in the 2008 version should be shown, and the differences between them explained, with reference to any practical difference this caused. --Wtshymanski (talk) 21:19, 4 April 2012 (UTC)

- It seems to be a consistent practice in Wikipedia to have a main article on subjects with a revision history (e.g. COBOL) with sections on subsequent versions. I would concur with "Floating Point Standards" as a title and include information from ISO/IEC 10967. The progression of improvements to standards reflects on the advancement of the technology. I would like to see "How did we get to here?" -- Softtest123 (talk) 10:56, 27 April 2012 (UTC)

The redirect has variously been pointed at the -1985 and -2008 versions. I agree they should be merged. However, a more recognizable and precise title would make sense. The article is not so much about that standard document as about its contents, so I'd say that "IEEE standard floating point" would make more sense than any of the current redirects such as "IEEE floating point standard". Or just "IEEE floating point" would be good, as someone suggested already. Dicklyon (talk) 16:19, 7 April 2012 (UTC)

- I agree with the idea that the title should suggest the article content and therefore concur with "IEEE floating point" but would also agree with "IEEE standard floating point". I still concur with the merge of this article with IEEE 754-1985. Softtest123 (talk) 21:41, 2 June 2012 (UTC)

- I'll start an RM discussion at the end of this page. Dicklyon (talk) 21:43, 2 June 2012 (UTC)

Requested move

- The following discussion is an archived discussion of a requested move. Please do not modify it. Subsequent comments should be made in a new section on the talk page. No further edits should be made to this section.

The result of the move request was: moved. Looks like there's also consensus to merge, but that doesn't require an admin. Jenks24 (talk) 05:07, 10 June 2012 (UTC)

IEEE 754-2008 → IEEE floating point – Per earlier discussions on the talk page, the page should be given a meaningful name and the other standard date article should be merged in. Dicklyon (talk) 21:45, 2 June 2012 (UTC)

- Support. I don't think there's anything much more than the History section that can be copied over. Most of the rest is duplicates of stuff in other articles that are referenced here. There is room for extra here though based on detailing better the change between the revisions. Dmcq (talk) 22:25, 2 June 2012 (UTC)

- Support. General name/article is better. Glrx (talk) 03:44, 3 June 2012 (UTC)

- Support. Per WP:COMMONNAME, "Wikipedia does not necessarily use the subject's "official" name as an article title; it prefers to use the name that is most frequently used to refer to the subject in English-language reliable sources." The common name is "IEEE floating point", not the spec number. Msnicki (talk) 15:48, 3 June 2012 (UTC)

- How about the other question about the merge? Dmcq (talk) 15:54, 3 June 2012 (UTC)

- Support move and merge. Having all these separate articles is confusing. It makes more sense to merge them into one with a better name. If covering all the different revisions would make this article too large it would be better to split it into a main article and History of the IEEE floating point standard than to create a separate article for each revision. —Ruud 20:52, 3 June 2012 (UTC)

- Support move and merge. There is additional information in Floating point that maybe should be in this article instead. That article should be aligned with this new one. Softtest123 (talk) 00:45, 4 June 2012 (UTC)

- Support move and merge, agree with Softtest123's suggestion about moving appropriate bits from Floating point here and with Dmcq's suggestion about improving the coverage of the changes between revisions. 1exec1 (talk) 17:11, 4 June 2012 (UTC)

- The above discussion is preserved as an archive of a requested move. Please do not modify it. Subsequent comments should be made in a new section on this talk page. No further edits should be made to this section.

Other merges

- The following discussion is closed. Please do not modify it. Subsequent comments should be made in a new section. A summary of the conclusions reached follows.

- This merge discussion encompasses 3-4 separate wikipedia articles, all of which contain a lot of very technical and detailed information. It appears, based on the discussion below, that this is NOT a trivial merge discussion between two pages and is, in fact, a discussion on a major reorganization and rewrite of the information on the topic. Due to the highly technical nature of this topic, it's unlikely that you're going to find a "merge editor" willing to do the work for you (WP:MERGE really doesn't work that way). So due to the fact that this is not a merge discussion but more a discussion on reorganization, I am removing the merge tags and closing the merger discussion as no consensus. WTF? (talk) 17:12, 2 June 2013 (UTC)

Merges from IEEE 754-1985 and IEEE 754 revision have been proposed. I support the former because there is a lot of overlap between this article and IEEE 754-1985. I don't support dumping all revision information in IEEE 754 revision into this article. I think this topic can be handled in WP:SUMMARY style. --Kvng (talk) 17:49, 22 August 2012 (UTC)

- I think IEEE 754 revision needs to be rewritten in a more encyclopedic tone (see for example IEEE 754 revision#Clause 6: Infinity, NaNs, and sign bit, this is what you would expect to see in the "Revision Overview" section of the actual specification, not in an encyclopedic article). In a more concise form it would probably belong here as well. —Ruud 20:10, 22 August 2012 (UTC)

- Support merging all three. Better to have one well-written article than to split our efforts among three. --Guy Macon (talk) 07:58, 23 August 2012 (UTC)

- Perhaps the first thing to do is to edit IEEE 754 revision. I agree with Ruud that it needs work. Once that's finished, a merge might be more palatable to me. --Kvng (talk) 18:50, 24 August 2012 (UTC)

- Support: I see no reason why these cannot all be merged into a single article. A section on history could detail development and differences, etc. I also am adding IEEE 854-1987 to the mix proposing it also for merging as it is a short article and was superseded by IEEE 754-2008 as well. 50.53.15.59 (talk) 11:50, 9 December 2012 (UTC)

If it's wrong can someone fix it?

Why does the layout example have the following statement at the end?

"The fractional parts are wrong in both these examples. The first one should be 1.171875 rather than 1.34375."

DGerman (talk) 02:08, 13 September 2013 (UTC)

- The comment about the values being wrong was added on 9 Sep 2013, and the section was moved into this article on 25 Aug 2013 (apparently it had been at Audio bit depth).

- I checked that the comment is correct at least as far as the first example goes (fraction is 1.171875 not 1.34375; the latter would occur if the fraction started with a single zero rather than two). Given that the example is new and incorrect, and that Single-precision floating-point format has a properly formatted and correct example (and is linked in "binary32"), I have removed the section as unnecessary. Johnuniq (talk) 04:00, 13 September 2013 (UTC)

- The first one must be a copy error. The second one is correct as far as I can tell. I did both with a computer program and I just double-checked it. I assume the IP was too lazy to count all the way to that 20th 1 to actually check both problems. Radiodef (talk) 05:08, 23 September 2013 (UTC)
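The check described above can be repeated in a few lines. This is only a sketch: the helper name `significand` and the two bit strings are illustrative assumptions (the removed example is not quoted here, so only the leading fraction bits implied by the two quoted values are used, with all remaining bits taken as zero).

```python
def significand(fraction_bits: str) -> float:
    """Value of 1.f for a normal binary number, given the leading fraction bits."""
    value = 1.0  # implicit leading 1 of a normal number
    for position, bit in enumerate(fraction_bits, start=1):
        if bit == "1":
            value += 2.0 ** -position  # bit at position i contributes 2^-i
    return value

# Fraction starting with two zeros: 1/8 + 1/32 + 1/64
print(significand("001011"))  # 1.171875
# Same bits with a single leading zero: 1/4 + 1/16 + 1/32
print(significand("01011"))   # 1.34375
```

Dropping one leading zero shifts every set bit one place to the left, which is consistent with the explanation given for how 1.34375 could have arisen.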

Rename to “IEEE 754”

Do rename kindly. There are many IEEE standards, and the only uniform and consistent way to cover them all on Wikipedia is to name each article after the technical standard name. There is no reason whatsoever to think up our own names when there are official names to them, so we will use those as we naturally should. Anyone interested in the verbose standard name won't die from a little effort of obtaining the standard's text and reading it. I would rename myself right away, but I don't have an account, nor do I plan to have it in the first place. —176.193.211.123 (talk) 18:09, 2 June 2014 (UTC)

- Please read the "Suggested move" section above. Do you have any reason to believe that the consensus has changed in the two years since we had that discussion? --Guy Macon (talk) 18:54, 2 June 2014 (UTC)

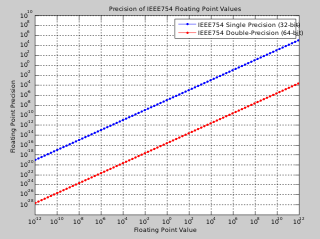

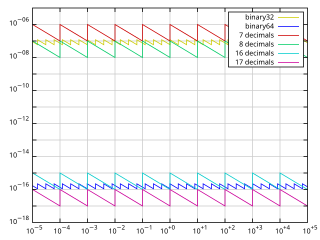

Graph of precision

On 12 September, User:Ghennessey added a graph showing the precision of floating-point numbers (defined here as ulp(x)) as a function of value (x). I think such a graph is a very good idea, as it helps visualize the actual precision one can expect from float32 vs. float64. The graph, however, has a few shortcomings, which I addressed by replacing it with a new graph. Then Ghennessey reverted my edit, with the argument that his original version “is of greater practical use” because it “can be used to select an appropriate format given the expected value of a number and the required precision”. I do not agree with this argument, and here I am explaining why. For reference, here are both graphs:

- Ghennessey’s version: Precision of binary32 and binary64 in the range 10^−12 to 10^12.

- My version: Relative precision of binary32 and binary64 formats, compared with decimal representations using a fixed number of significant digits. Relative precision is defined here as ulp(x)/x, where ulp(x) is the unit in the last place in the representation of x, i.e. the gap between x and the next representable number.

The main difference between the graphs is that my version shows the relative precision (defined as ulp(x)/x), while Ghennessey’s shows the absolute precision (i.e. ulp(x)). Both are relevant metrics of precision, but I argue that relative precision is more useful.

First, floating point numbers are designed to provide an almost constant relative precision across the whole range. This is in contrast to fixed-point, which provides a known absolute precision. Clearly, floating-point has proved to be more useful, as fixed-point is now seldom used outside embedded systems lacking an FPU. Programming with fixed-point is actually difficult, because one has to be aware of the required absolute precision and range at every intermediate step of every computation. The almost-constant relative precision of floating-point makes programming easier. This, in itself, is a proof that relative precision is more useful than absolute precision. Also, in the table before the graph, precisions are compared to numbers of decimal digits, which is a very intuitive way of thinking of precision, and is actually all about relative precision.

Then, there are a few other points which are a lot clearer on my graph:

- The precision curves on Ghennessey’s graph look like linear functions of the value, whereas they are actually step functions. My version clearly shows the discontinuities: the relative precisions are shown to be sawtooth functions. It then appears that the relative precisions of floating-point formats are not strictly constant, but instead oscillate within narrow ranges.

- I added the precision curves for selected numbers of decimal digits. This makes clear why it is difficult to compare a binary floating point precision to a number of decimal digits: the decimal precision is also a sawtooth function, with a wider range of variation, and is thus less consistent than binary floating point.

- There is an apparent contradiction in the article, where the precision of float32 is quoted to be about 7.22 decimal digits, and yet the section Character representation states that 9 decimal digits are required to properly store a float32 in decimal. My graph lifts the contradiction, showing that the precision of float32 lies mostly between 7 and 8 decimal digits, but occasionally gets better than 8 decimals (or rather, 8 decimals gets worse than float32).

In conclusion, my version provides a more comprehensive view of the precision one can expect from floating point numbers, including its discontinuities, not only in binary but also in decimal. Thus I will be reverting Ghennessey’s revert unless a discussion here shows some consensus against it.

— Edgar.bonet (talk) 09:20, 22 September 2014 (UTC)

- Your graph has more useful information in it. However, as things stand, both graphs are probably difficult for non-experts to interpret properly. Yours, having more information, may be more difficult. I think it may be better to present the information available in the graphs as text. We need to improve the Formats section and perhaps add a separate section or sub-section to explain precision. Once that is done, readers may be in a better position to appreciate a graph like this. ~KvnG 13:45, 25 September 2014 (UTC)

- I'll second Kvng — both graphs are technical, dense, and repetitive. To first order, both graphs say there's a first order relative error and a second order variation of that error due to the magnitude of the number. The first point is trivially stated (and the obvious trend in the first graph), and the second point does not seem that important. How many calculations are going to depend on that flutter? Can people scale calculations so that all the mantissas have several leading one bits? (Goes against log distribution of leading digits.) If scaling could be done, what impact would it have on computation? It's only squeezing an extra bit of precision. Glrx (talk) 18:13, 30 September 2014 (UTC)
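For readers who want to see the sawtooth numerically rather than on a graph, here is a minimal sketch of the relative precision ulp(x)/x discussed in this thread. It assumes Python 3.9 or later (for `math.ulp`) and that Python's `float` is binary64; the helper names `ulp32`, `rel_precision32` and `rel_precision64` are made up for this illustration, and `ulp32` only handles positive finite values.

```python
import math
import struct

def ulp32(x: float) -> float:
    """Gap between x (rounded to binary32) and the next larger binary32 value."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    current = struct.unpack("<f", struct.pack("<I", bits))[0]
    following = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return following - current

def rel_precision32(x: float) -> float:
    return ulp32(x) / x

def rel_precision64(x: float) -> float:
    return math.ulp(x) / x

# Within one binade [1, 2) the ulp is constant, so ulp(x)/x falls as x grows,
# then jumps back up at the next power of two: a sawtooth.
for x in (1.0, 1.5, 1.999, 2.0, 3.0):
    print(x, rel_precision32(x), rel_precision64(x))
```

At every power of two the ratio returns to 2^−23 for binary32 and 2^−52 for binary64, and in between it drops towards half of that value, which is the narrow oscillation described above.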

Any reason William Kahan isn't mentioned?

Any reason William Kahan isn't mentioned? Espertus (talk) 18:34, 30 September 2016 (UTC)

- He is mentioned in our IEEE 754-1985 article. Should we merge the two articles into one that prominently mentions William Kahan? --Guy Macon (talk) 21:40, 30 September 2016 (UTC)

- I don't think the articles should be merged. IEEE 754-1985 should mainly be historical. IEEE floating point should focus on the current standard. But there could be a short section on the history, where William Kahan could be mentioned. Vincent Lefèvre (talk) 23:32, 30 September 2016 (UTC)

- I agree. --Guy Macon (talk) 04:22, 1 October 2016 (UTC)

binary80

An entry for binary80 was recently added to the interchange formats table. As I don't have a copy of the standard, I can't look to see if this is listed. I do note that this format is not the 80 bit format used by Intel, as that format does not have a hidden 1. I know that the standard allows for and/or encourages extra precision for intermediate values, presumably leading to the Intel 80 bit format. The question here is interchange formats. Gah4 (talk) 16:03, 6 October 2016 (UTC)

- The storage width must be a multiple of 32 (except for binary16). So, binary80 is not possible. Vincent Lefèvre (talk) 20:44, 6 October 2016 (UTC)

- It is not an interchange format. The standard wants intermediate calculations done with more dynamic range, but it does not specify a format for those intermediate values. Glrx (talk) 18:13, 11 October 2016 (UTC)
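The width rule mentioned above can be sketched as follows. The parameter formula for widths of 128 bits and more is quoted from memory of the 2008 revision's interchange format table, so treat it as an assumption to check against the standard itself; the function name `binary_interchange_params` is hypothetical.

```python
import math

# Fixed parameters (precision p, emax) for the narrow binary formats.
_NARROW = {16: (11, 15), 32: (24, 127), 64: (53, 1023)}

def binary_interchange_params(k: int):
    """Return (p, emax) for a binary interchange format of storage width k bits."""
    if k in _NARROW:
        return _NARROW[k]
    if k < 128 or k % 32 != 0:
        raise ValueError(f"binary{k} is not an interchange format width")
    p = k - round(4 * math.log2(k)) + 13   # assumed formula for k >= 128
    emax = 2 ** (k - p - 1) - 1
    return p, emax

print(binary_interchange_params(128))  # (113, 16383)
print(binary_interchange_params(256))  # (237, 262143)
try:
    binary_interchange_params(80)
except ValueError as err:
    print(err)
```

Under this rule a width of 80 bits is rejected, which matches the replies above.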

256 bit AVX

Is it true that AVX supports 256-bit floats? And if it does, I guess they would be in the style of IEEE 754, or are they about to become part of the standard? --178.2.195.152 (talk) 18:08, 4 December 2011 (UTC)

- No, what it supports is doing a number of floating point operations in parallel; the V stands for vector, which in effect means a number at a time. Dmcq (talk) 18:21, 4 December 2011 (UTC)

- I think the IBM z10 series implements various 128 bit floating point formats in hardware, and some older IBM and VAX systems also supported 128 bit float, though I believe they used a bit of software or microcode support via doubles. Dmcq (talk) 18:33, 4 December 2011 (UTC)

- Quoting the FMA instruction set Article:

- The FMA instruction set is the name of a future extension to the 128-bit SIMD instructions in the X86 microprocessor instruction set to perform fused multiply–add (FMA) operations

- Both contain fused multiply–add (FMA) instructions for floating point scalar and SIMD operations.

Sounds to me as if there were 128-bit registers that could be used either for SIMD operations or for FMA on floats that would be 128 bits long. Or am I misunderstanding things? --178.6.206.24 (talk) 18:33, 5 December 2011 (UTC)

- Two symmetrical 128-bit FMAC (fused multiply–add capability) floating-point pipelines per module that can be unified into one large 256-bit-wide unit if one of integer cores dispatch AVX instruction and two symmetrical x87/MMX/SSE capable FPPs for backward compatibility with SSE2 non-optimized software

Or does it simply mean that four 64 bit floats are processed at the same time? --178.6.206.24 (talk) 18:38, 5 December 2011 (UTC)

The operations deal with a number of floating point operations at a time, for instance one can do eight single precision or four double precision operations at a time with the 256 bit registers. The bit about the two 128 bits being used as a 256 bit is that for two cores on a chip their floating point power can be shared rather than spending a pile of silicon on separate parallel floating point units for the 256 bit vectors. Dmcq (talk) 20:57, 5 December 2011 (UTC)

- The IBM S/360 model 85 implements a 128 bit extended precision (IBM name) format in hardware, except that DXR (divide extended) is done through software emulation. (IBM determined that DXR wasn't used often enough to make the hardware implementation worthwhile.) The same format is implemented in all S/370 models that implement floating point. It is base 16, like all S/360 and S/370 floating point, with an exponent field in both halves, simplifying software emulation. (Software emulation is provided for other S/360 models.) Somewhere in the ESA/390 years, binary (IEEE 754 format) floating point was added, including appropriate 128 bit formats. Later z/Architecture models implement IEEE standard decimal floating point, with single (64 bit) and double (128 bit) formats. VAX has optional hardware (microcode) and software emulation for H-float, a 128 bit format. Gah4 (talk) 10:23, 18 June 2017 (UTC)
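The lane arithmetic from the replies above (eight single precision or four double precision values per 256-bit register) can be illustrated without any SIMD hardware. This is only a sketch using byte packing; `lanes` is a hypothetical helper, and nothing here calls real AVX instructions.

```python
import struct

def lanes(register_bits: int, element_bits: int) -> int:
    """Number of elements of the given width that fit in one vector register."""
    return register_bits // element_bits

for name, code, width in (("binary32", "f", 32), ("binary64", "d", 64)):
    n = lanes(256, width)
    # Pack n ordinary floats side by side, as a vector register would hold them.
    packed = struct.pack(f"<{n}{code}", *([1.5] * n))
    print(name, n, "lanes,", len(packed) * 8, "bits in total")
```

The register is 256 bits wide, but each element in it is still an ordinary binary32 or binary64 number.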

Requested move 16 June 2017

- The following is a closed discussion of a requested move. Please do not modify it. Subsequent comments should be made in a new section on the talk page. Editors desiring to contest the closing decision should consider a move review. No further edits should be made to this section.

The result of the move request was: page moved. Andrewa (talk) 22:21, 23 June 2017 (UTC)

IEEE floating point → IEEE 754 – This is much more commonly used to refer to the standard (391,000 results) as opposed to the current name (126,000 results). The current title is almost never used as a name for the standard, only in a phrase such as "IEEE floating point standard" or "IEEE floating point numbers". The name "IEEE 754" is widely known and covers all versions of the standard. I would argue it is the actual WP:COMMONNAME here. nyuszika7h (talk) 09:25, 16 June 2017 (UTC)

- Given that IEEE 754 has been adopted as ISO/IEC/IEEE 60559:2011, perhaps this article should (in fact) be renamed to ISO/IEC/IEEE 60559 ? Andrew D Banks (talk) 10:42, 16 June 2017 (UTC)

- There is WP:COMMONNAME which suggests that the title should be what people usually call it, even though other names would be more accurate, or technically correct. There are some articles where I disagree with the title, though maybe after some years the old name won't be so common anymore. I think enough people can remember 754, but not so many 60559. A redirect would make sense, though. Gah4 (talk) 10:10, 18 June 2017 (UTC)

- Since there is a redirect, I am opposed, though not strongly, to the change. As well as I know it, the hit numbers from Google are not reliable indicators. Gah4 (talk) 22:40, 18 June 2017 (UTC)

- Comment stats Glrx (talk) 15:32, 18 June 2017 (UTC)

- Comment: Better stats. Not what I expected to find. —BarrelProof (talk) 17:22, 18 June 2017 (UTC)

- Support per nom. Yeah, "IEEE 754" is the WP:COMMONNAME, as BarrelProof's ngram demonstrates. No such user (talk) 15:31, 19 June 2017 (UTC)

- Comment: There's no copyleft-significant history at the target, but there's one interesting entry 02:42, 24 November 2007 KelleyCook (talk | contribs | block) . . (27 bytes) (+27) . . (moved IEEE 754 to IEEE 754-1985: it's real name -- which possibly will soon we can have a new page at IEEE 754-2008.) So the original IEEE 754 page is now at IEEE 754-1985. Andrewa (talk) 22:15, 23 June 2017 (UTC)

- The above discussion is preserved as an archive of a requested move. Please do not modify it. Subsequent comments should be made in a new section on this talk page or in a move review. No further edits should be made to this section.

emin and emax

I removed the recent addition:

- The emin = 1 − emax requirement can be explained by the fact that one wishes emin ≈ emax and that it has the following property: if x is a normal number, then 1/x is less than the largest representable finite number in magnitude, thus avoiding an overflow. And the constant 1 is due to parity reasons: for the formats defined in the original IEEE 754-1985 standard (only binary formats), the number of available values for the exponent is even, so that emin and emax needed to have a different parity to be able to use all of these values.

as it is more complicated than indicated, and also is tending toward WP:OR. First, comparing exponent values without indicating the base or position of the radix point in the significand isn't useful. There are an even number of exponent values because two have special meaning in the binary formats. Is there a reference to the actual thought process that went into exponent values in IEEE 754-1985? (Personally, I think denormals were a bad idea, but nobody asked me.) Gah4 (talk) 16:54, 8 October 2018 (UTC)

- I agree that this is a bit WP:OR (not exactly, because here one has the result and the goal is to explain it), though there are some intuitive reasons behind the explanation. What would be WP:OR is whether emin = 1 − emax is better than something else. Concerning the comparison of the exponent values, I was trying to be concise, but apparently this was too concise. These were the exponents with the same meaning as used just before (this is important). And the reason behind emin ≈ emax is actually that the reciprocal of the minimum positive normal number is approximately the maximum normal number (= maximum finite number). With the chosen notation for the exponents, the minimum positive normal number is b^emin and the maximum normal number is very close to b^(emax+1). This actually gives emin ≈ −1 − emax (but note the ≈). Thus some people are surprised by the choice emin = 1 − emax (i.e., with 1 instead of −1). The intuitive explanation is that it is better to avoid overflows (because by default, one would get an infinity) than to avoid underflows close to the threshold (one would just lose 1 or 2 digits of precision). To avoid overflows, one needs emax ≥ −emin. With the parity constraint, this gives emax ≥ 1 − emin. Then, to minimize the loss of precision in the underflow when inverting large numbers (such as the maximum normal number), one gets emax = 1 − emin, i.e. emin = 1 − emax.

- I had tried to find a reference in the stds-754 discussions, but could not find one. And what happened before 1985 has probably been lost. There will probably be some official document (rationale) explaining the background of IEEE 754 (2018 version) some time in the near future. I'm not sure whether the above point will be covered. This won't be the discussion that led to IEEE 754-1985, but at least it will have some official status. Vincent Lefèvre (talk) 17:48, 8 October 2018 (UTC)

- Much of the IEEE standards are available if you pay for them, but not free. Yes emin ≈ emax, but that still leaves +/- a few. For comparison, in Hexadecimal floating point base is 16, emin is -64 and emax is 63. This allows values between 16^−65 and almost 16^64. The hexadecimal point is just to the left of the MSD. (That way they can call it a fraction.) VAX floating point formats F_float and G_float are similar to IEEE single and double, not counting byte order (which IEEE doesn't define anyway), except that the binary point is just to the left of the MSB. Also, the exponent range is symmetric, with emin = - emax. You might write to Kahan, and see what he says about it. He might be able to give some references, too. Though WP prefers secondary references over primary references, he might have some of each. But as I noted, you need to include the position of the radix point, in addition to emin and emax. There are also some formats with the radix point just to the right of the LSD. Gah4 (talk) 03:15, 9 October 2018 (UTC)

- The old 1985 standard is available for free. Concerning the 2008 standard, I have it for free because I participated in the revision. The latest draft of the 2018 revision (P754/D2.41) is available for free on http://speleotrove.com/misc/. Concerning Hexadecimal floating point, actually IBM hexadecimal floating point, these are IBM formats, not conforming with any of the IEEE formats. The rules for emin and emax are different, just because IBM made different choices; these ones have their own advantages and disadvantages. For instance, the reciprocal of a normalized number (e.g., 16^−65) can give an overflow (note: the maximum value is almost 16^63, not 16^64). The only plausible reason for which IEEE chose a different rule for the exponents (i.e., for the minimum and maximum positive normal numbers) is to avoid this overflow. Note about the position of the radix point: this is just a convention and is not associated with a format (in IEEE 754-2008, two such conventions are used, the usual one with the radix point after the first digit, with the exponent denoted e, and the one with the radix point after the last digit (LSD), with the exponent denoted q). Vincent Lefèvre (talk) 09:55, 9 October 2018 (UTC)

- Yes on most of those. At some point, exponent bias (offset in exponent range) is convention, but it is usual to fix the values. I knew that the ethernet standards were available, but I didn't know about this one. I did e-mail Kahan, but being retired he doesn't check e-mail so often. Yes both IBM HFP and VAX formats are older than IEEE 754, but I wasn't sure if you were suggesting general requirements of floating point formats. Without a reference, no matter how obvious it seems, it is still WP:OR. Well, you are allowed obvious arithmetic. (You don't need a reference for 2+2=4.) If Kahan replies, that would be nice. Otherwise, it is the ideas of the designers. Gah4 (talk) 10:52, 9 October 2018 (UTC)
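The overflow and underflow behaviour discussed in this thread can be checked numerically. The sketch below assumes that Python's `float` is binary64 (true on common platforms) and uses the hexadecimal bounds quoted in the replies above; it illustrates the consequences of emin = 1 − emax and says nothing about the designers' actual reasoning.

```python
import sys
from fractions import Fraction

# sys.float_info follows the C convention (significand in [0.5, 1)),
# so the IEEE 754 exponents are one less than min_exp and max_exp.
emin = sys.float_info.min_exp - 1   # -1022
emax = sys.float_info.max_exp - 1   # 1023
print(emin == 1 - emax)             # True

min_normal = sys.float_info.min     # 2**emin
max_finite = sys.float_info.max     # just under 2**(emax + 1)

# The reciprocal of the smallest normal number stays finite: no overflow.
print(1.0 / min_normal <= max_finite)        # True
# The reciprocal of the largest finite number is subnormal but nonzero:
# a gradual underflow with some loss of precision.
print(0.0 < 1.0 / max_finite < min_normal)   # True

# IBM hexadecimal floating point, with the bounds quoted in the thread:
hfp_min = Fraction(1, 16 ** 65)     # smallest normalized value
hfp_max_bound = Fraction(16 ** 63)  # the maximum value is just under this
print(1 / hfp_min > hfp_max_bound)  # True: the reciprocal overflows
```

The asymmetry is visible here: the binary64 choice trades a slightly lossy underflow for the absence of overflow, while the hexadecimal range quoted above lets the reciprocal of a small normalized number overflow.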

Merge of IEEE 754-related chapter from "Floating-point arithmetic" into here?

Hi, some while back it was proposed to move most of the contents of the chapter "Floating-point arithmetic#IEEE 754: floating point in modern computers" from that article into here and merge it with the existing contents. Apparently there was no discussion regarding this, perhaps because there was a parallel move request (see above).

I would support such a slight reorganization of contents. Basically, it wouldn't change the general structure but just sharpen the scope of the articles a bit more:

- Floating-point arithmetic is a generic concept article discussing all kinds of floating-point schemes (past and present), leaving the discussion of specific details to other articles

- IEEE 754 (or IEEE floating-point standard) is a generic article for IEEE floating-point related information, past, present and future

- ... various other articles discuss different aspects of the IEEE floating-point standards in better details

- ... various other articles about other floating-point standards (including legacy ones)

If carried out carefully I think this would improve the presentation of the information. People who wish to look up info on the IEEE standard would not have to read through unrelated stuff, and people interested in the floating-point concept "as is", historical implementations or alternatives would not have to be bothered with specifics of the IEEE standard. --Matthiaspaul (talk) 20:19, 11 July 2018 (UTC)

- Support - I assume you'd leave a WP:SUMMARY of this article in Floating-point arithmetic. ~Kvng (talk) 14:47, 14 July 2018 (UTC)

- Yes, of course. --Matthiaspaul (talk) 14:07, 15 July 2018 (UTC)

- Strongly disagree - In modern day usage floating point is overwhelmingly IEEE 754, so a floating point article that did not discuss the high level IEEE754 concepts to the depth required for correct floating point usage in practice, as the current article does, would be deficient. The separate IEEE754 article goes into much greater detail on the minutiae required for more specialised usage (implementation, formal proofs, etc.), which is the correct layout in my opinion. 71.105.36.73 (talk) —Preceding undated comment added 14:56, 27 November 2018 (UTC)

`totalOrder`

I added the python code in order to actually give people the algorithm. If you want to make it non-python, that's fine, but it should be the actual algorithm that appears in the IEEE 754 text. The english description is both incomplete. — Preceding unsigned comment added by GBGamer117 (talk • contribs) 07:39, 25 December 2018 (UTC)

- It is nowhere said in the standard that it gives an algorithm, and standards normally do not give an algorithm, but describe the behavior. Here, this is just a list of properties. This list was meant to be complete, thus redundant, in the sense that the values of both totalOrder(x,y) and totalOrder(y,x) are given for the same pair (x,y), even though one implies the other one (exactly one is true and one is false if x≠y, since this operation is specified as being a total ordering). However, currently only totalOrder(−NaN,y) and totalOrder(x,+NaN) are given, not their opposite cases. Thus this will not work as an algorithm. But how the standard should be interpreted is that the missing cases are specified via the general "total ordering" property instead of being undefined. I hope that this will be clarified (by explicitly adding the missing cases) in the next revision for 2019, even though we are at the stage of the latest drafts: After your edit with the python code, I mentioned the issue in the stds-754 list. Vincent Lefèvre (talk) 11:03, 25 December 2018 (UTC)

- I would call the description of an algorithm. It might not be exactly code, or anything, but it describes a sequence of actions to take. GBGamer117 (talk) 21:43, 25 December 2018 (UTC)

- No, it doesn't. In particular, "if and only if" is not the kind of things you find in an algorithm. Ditto for "negative sign orders below positive sign". You would need some translation to get an algorithm. And this also applies to totalOrder(−NaN,y) and totalOrder(x,+NaN). But the translation into an algorithm is not straightforward: this can be done in several ways, giving different algorithms. Vincent Lefèvre (talk) 23:13, 25 December 2018 (UTC)
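To make the point about translation concrete, here is one possible translation of the listed properties into code, out of the several that the comment above says exist. It is a sketch under stated assumptions, not the text of the standard: it assumes binary64 (Python's `float`), compares raw encodings, and the names `_key` and `total_order` are made up for this example. The ordering it gives among NaNs of the same sign simply follows their bit patterns.

```python
import math
import struct

_SIGN = 1 << 63

def _key(x: float) -> int:
    """Map a binary64 value to an integer whose order follows its encoding."""
    bits = struct.unpack("<Q", struct.pack("<d", x))[0]
    magnitude = bits & (_SIGN - 1)
    if bits & _SIGN:
        # Negative sign: larger magnitude orders lower, and -0 sits below +0.
        return -magnitude - 1
    return magnitude

def total_order(x: float, y: float) -> bool:
    """True when x orders at or below y (one reading of totalOrder(x, y))."""
    return _key(x) <= _key(y)

pos_nan = float("nan")
neg_nan = math.copysign(pos_nan, -1.0)

print(total_order(-0.0, 0.0))                 # True
print(total_order(0.0, -0.0))                 # False
print(total_order(1.0, pos_nan))              # True
print(total_order(neg_nan, float("-inf")))    # True
```

Because everything is derived from a single integer key, both totalOrder(x, y) and totalOrder(y, x) are defined for every pair, including the NaN cases that the property list leaves to the general total ordering requirement.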

tables

There are some tables, giving details of the representations, in IEEE 754-1985 that aren't here. I wonder if they should be? They seem useful to understand the standard. Gah4 (talk) 02:24, 7 March 2019 (UTC)

Restore link to ungated draft of the standard?

In a recent edit, an editor took out the link to a draft of the FP standard hosted at validlab.com, which is dated 2007. Since viewing of the current standard at the IEEE site is only available to subscribers, I suggest that the link to the ungated draft be restored to the article. EdJohnston (talk) 14:17, 26 July 2010 (UTC)

- The draft has a copyright notice on its face. "Permission is hereby granted for IEEE Standards Committee participants to reproduce this document for purposes of international standardization consideration." Not clear that public dissemination fits that description. Glrx (talk) 16:21, 26 July 2010 (UTC)

- Yes I agree, from the statements they put on the document it unfortunately looks like even putting a link to a copy would conflict with WP:COPYLINK. Dmcq (talk) 18:45, 26 July 2010 (UTC)

- In the edit mentioned above, the motivation for removing the link to the obsolete version was out of concern that people might use or rely on outdated information in the draft version that may have changed in the final version. I didn’t know that access to the standards was restricted (the links worked when I tried them; I hadn’t considered my institution has a campus-wide subscription to IEEE Xplore). Although the link was admittedly not removed for copyright reasons, I think Dmcq read the policy right: “Knowingly and intentionally directing others to a site that violates copyright has been considered a form of contributory infringement in the United States (Intellectual Reserve v. Utah Lighthouse Ministry).” That said, those whose institutions don’t subscribe to IEEE Xplore (such as students in developing countries) can always Google for an illegitimate copy of the standard on the net; it’s not Wikipedia’s obligation to provide that service. Btw, for those in the U. S., buying the standard isn’t really that crazy an idea. I once sent away for a paper copy of the 754-1985 standard for an undergrad processor design project; it was the best $56 I ever spent. —Technion (talk) 10:15, 27 July 2010 (UTC)

The link to draft standard has been reintroduced, and I have been reverting it for the same reasons given above. There is no indication that IEEE has given permission for unlimited public release of the draft. I don't like that the standard isn't freely available, and I don't like removing the link to the draft. Unfortunately, I don't think the link is permitted. Please don't reintroduce the link without getting consensus on this page. Glrx (talk) 19:22, 13 December 2010 (UTC)

Many other draft standard documents are freely available, even when the final document is not free. Can someone figure out officially if this one should be available by link? (Maybe ask IEEE?) Gah4 (talk) 13:24, 24 September 2011 (UTC)

- Any more on this one? Gah4 (talk) 02:19, 7 March 2019 (UTC)

(Removed link was here)

- I meant any more on the distributability of the documents? In many cases, draft versions, but not the final version, of a standard are allowed to be distributed. In some, you are allowed to give out links, but the end user has to download from the original source. I e-mailed what seems to be the owner of ucbtest, and also W. Kahan, about redistribution. Will see if any replies come back. Gah4 (talk) 21:30, 7 March 2019 (UTC)

- These documents have already legally been made public by the 754R working group on http://754r.ucbtest.org/web-2008/ (this is the original source, as being the web site of the working group). Vincent Lefèvre (talk) 21:55, 7 March 2019 (UTC)

- More specifically, it isn't a random site with copies of copyrighted material, but the actual site where they are stored. (Actually, you just said that, but to be doubly sure.) here is the description of what is on the site and such. It even has a link to IEEE 754-2008 as a place for public commentary! Gah4 (talk) 22:36, 7 March 2019 (UTC)

- Another piece of evidence is that the 754r.ucbtest.org links (the old ones, without "web-2008", which was added due to the new revision for 2019) are visible in this e-mail (which was publicly available on the IEEE web site, until a cleanup of the mailing-list archives). Vincent Lefèvre (talk) 22:45, 7 March 2019 (UTC)

- Reply from the owner of ucbtest.org: I think anything is copyright to the creator. However, items on ucbtest.org can be freely distributed, referenced, and linked to. As always, credit the original author(s) if they are identified in the text. Gah4 (talk) 01:52, 8 March 2019 (UTC)

- More specifically, I got a reply from the head of 754WG, if that matters. ucbtest.org seems to be their web site. Gah4 (talk) 09:31, 8 March 2019 (UTC)

- "I think anything is copyright to the creator." That's not how copyright works. A work for hire belongs to the principal/organization rather than the agent/person who wrote the text.

- This is not one of "many cases". On the face of the work is an IEEE copyright claim. Is there any dispute about IEEE's copyright? The IEEE has granted the committee a limited right to distribute the work for the purpose of furthering work on the standard; that was quoted above. The IEEE did not give the committee the right to publish drafts to the world at large.

- Getting some third-hand and third-party hearsay statement that it is OK to link is worthless. What do you think the IEEE would say if asked? And WMF would want any evidence of permission to go through the ticket system rather than an editor saying he got an email from the IEEE saying it was OK.

- To put a finer point on it, the apparent purpose of including links to drafts on this WP page has nothing to do with characterizing the history of the draft development. Instead, it is about going around IEEE's copyright. Hey, you don't have to buy the standard because you can get a copy of the draft that is 99.9% of the standard. To be clear, I dislike the ANSI and IEEE approach to standardization: form a committee, charge the committee members a steep fee, get the members to donate their time to develop the standard, and then charge an arm and a leg for copies of the standard. I also dislike what IEEE did to the BSTJ. I much prefer IETF and W3C's model. It does not cost much to distribute a PDF. That said, what I want and what is so are different beasts.

- Glrx (talk) 20:03, 8 March 2019 (UTC)

- It isn't some third-hand or third-party. I heard it from the chair of 754WG, and the files are posted on the 754WG official web site. First hand and first party. (Well, second party if you include me. But still first hand since it is the official web site.) From WP:COPYLINK Since most recently-created works are copyrighted, almost any Wikipedia article which cites its sources will link to copyrighted material. It is not necessary to obtain the permission of a copyright holder before linking to copyrighted material, just as an author of a book does not need permission to cite someone else's work in their bibliography. (It seems that one purpose of the web site is to distribute the IEEE 754 test suite which is named ucbtest, I suspect because it came from UCB.) People who build actual hardware can't build it 99.9% correct, so likely have to buy the official version. (Imagine the lawsuit where your answers are wrong due to the 0.1% difference.) This is true, and well known, for some of the ANSI standards, too. Gah4 (talk) 20:56, 8 March 2019 (UTC)

- You are confused about what copylink means. If X has a copyrighted website, then I may not copy the site's content, but I may link to the site. If Y's site is illegally publishing X's content, then I may not copy the site's content nor link to the site. There is no evidence that Y has permission to publish the drafts to the entire world; the domain is not an IEEE domain nor is it under the control of the IEEE. The website could have a legitimate purpose for the members of the committee, but to the extent it publishes the drafts to the entire Internet, it is violating IEEE's copyright. Nowhere does the IEEE say the committee may publish drafts to the entire world. The IEEE could easily issue a DMCA takedown notice to the site. If WMF publishes the link, WMF could be the target of a DMCA takedown. Glrx (talk) 21:28, 8 March 2019 (UTC)

- I just removed items from the Further reading section that have copyright notices on them. The chair of 754WG seems to believe that they can be published for the world, as that is their website. There is no attempt to hide them. Having the copyright notice is necessary so that those who download them know that they are copyrighted and, for example, don't try to sell as drafts or final versions. Since I asked, 754WG could have decided that they weren't supposed to be there, and taken them down. I suppose I can try writing to the president of IEEE, but most large organizations delegate authority so that not all questions go to one person. 754WG could have said that they didn't know about the status, but they also didn't do that. Gah4 (talk) 21:52, 8 March 2019 (UTC)

- Concerning the Further reading section, some items were legal: some without links (except references to the publisher's site), some with a URL belonging to the author of the article (even though the copyright is owned by some publisher, the author has the right to put the article on his website or some public archive like HAL / "archives ouvertes"). Concerning the standard drafts, the members of the working group, who wrote the draft, never transferred the copyright to IEEE (well, at least I never did), so that even IEEE copyright could be disputed. Vincent Lefèvre (talk) 22:39, 8 March 2019 (UTC)

Wrong statements regarding memory representation

> and under the standard the explicitly represented part of the significand will lie between 0 and 1

Isn't the significand an integer? --Lumbricus (talk) 18:07, 25 February 2019 (UTC)

- Not necessarily. It depends on the convention chosen to define the exponent. The IEEE 754-2008 standard itself uses two such conventions (the ISO C standard uses a third one). In the definition of a significand by the IEEE standard: "A component of a finite floating-point number containing its significant digits. The significand can be thought of as an integer, a fraction, or some other fixed-point form, by choosing an appropriate exponent offset." Vincent Lefèvre (talk) 19:39, 25 February 2019 (UTC)

- Many previous representations have the significand between 0 and 1, sometimes calling it a fraction. IEEE-754 is very similar to the VAX floating point formats, except for changing the meaning of the significand by a factor of 2, so with the hidden bit the normalized forms are between 1 (inclusive) and 2 (exclusive). There are also some floating point formats, I believe CDC is one, where the significand is an integer. With appropriate normalization rules, integer values have the same bit representation in floating point. Gah4 (talk) 02:30, 7 March 2019 (UTC)

- Every time I saw the term fraction, it was with a different meaning: the part of the significand after the implicit bit, i.e. what corresponds to the trailing significand field in IEEE 754-2008, where the significand is 1.f for normal numbers and 0.f for subnormals. So the fraction f is between 0 and 1, but for normal numbers, the significand is between 1 and 2. Vincent Lefèvre (talk) 14:36, 7 March 2019 (UTC)

- BTW, the article IEEE 754-1985 uses the term fraction with the meaning of trailing significand field. Vincent Lefèvre (talk) 14:40, 7 March 2019 (UTC)

- And the term fraction was defined in the IEEE 754-1985 standard: "fraction. The field of the significand that lies to the right of its implied binary point." Thus this should be regarded as the only correct meaning. FYI, the definition of the significand in the 754-1985 standard: "significand. The component of a binary floating-point number that consists of an explicit or implicit leading bit to the left of its implied binary point and a fraction field to the right." Vincent Lefèvre (talk) 14:49, 7 March 2019 (UTC)

- The first floating point format I learned, and for some years the only one, is IBM_hexadecimal_floating_point. This format is base 16, with the hexadecimal point just to the left of the hexadecimal fraction (IBM term). With the implied 1, the IEEE representation is an improper fraction. Oh well. Gah4 (talk) 01:42, 9 March 2019 (UTC)
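The conventions discussed in this thread can be compared with a minimal sketch, assuming binary64 (Python's `float`). The helper names `decode_binary64` and `as_integer_significand` are hypothetical, chosen only for illustration. The first returns the significand in the 1.f (normal) or 0.f (subnormal) form, where f is the fraction, i.e. the trailing significand field. The second shows the same value with an integer significand and a correspondingly shifted exponent.

```python
import struct
from fractions import Fraction


def decode_binary64(x: float):
    """Return (sign, exponent, significand), significand being 1.f or 0.f."""
    bits = struct.unpack(">Q", struct.pack(">d", x))[0]
    sign = bits >> 63
    biased_exponent = (bits >> 52) & 0x7FF
    fraction_field = bits & ((1 << 52) - 1)  # trailing significand field
    if biased_exponent == 0x7FF:
        raise ValueError("infinity or NaN has no significand")
    f = Fraction(fraction_field, 1 << 52)  # the fraction, 0 <= f < 1
    if biased_exponent == 0:
        return sign, -1022, f  # zero or subnormal: 0.f at emin
    return sign, biased_exponent - 1023, 1 + f  # normal: 1.f


def as_integer_significand(x: float):
    """Same value, viewed with an integer significand (exponent shifted by 52)."""
    sign, exponent, significand = decode_binary64(x)
    return sign, exponent - 52, int(significand * (1 << 52))
```

For a normal number the significand returned by `decode_binary64` lies in [1, 2), while the fraction f alone lies in [0, 1). Both views describe the same value, since the value is always (−1)^sign × significand × 2^exponent.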

Misleading statement

Currently, the page asserts "For the binary formats, the representation is made unique by choosing the smallest representable exponent allowing the value to be represented exactly." But this is incorrect. For example, the decimal value 0.2 cannot be represented exactly using this (binary format) representation.

- (don't forget to sign your post with four ~)

- The statement is related to normalization, not to exact representation of non-binary fractions, but I still agree that it isn't quite right. The decimal formats can represent non-normalized values, that is with zero digits on the left. Binary formats, with the hidden one, can't. There should be a better way to say it, though. Gah4 (talk) 19:20, 6 October 2019 (UTC)

- Note that the absence of comma before "allowing" is important. Instead of giving additional information, it gives a restriction (restrictive clause). Perhaps add "of a floating-point number" after "the representation"? Alternatively, say that when the exponent is not emin, the first digit must be 1, which makes the representation unique. Vincent Lefèvre (talk) 22:21, 6 October 2019 (UTC)
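The two separate points in this thread (exact representability versus uniqueness of the representation) can be illustrated with a small sketch. It assumes Python's `float` is binary64 and uses Python's `decimal` module as a stand-in for a decimal floating-point format; that module is not an IEEE 754 interchange format, so treat the decimal half as an analogy only.

```python
from decimal import Decimal
from fractions import Fraction

# Exact representability: 0.2 is rounded when stored in a binary format,
# so the stored value is not exactly 1/5.
stored_is_exact = Fraction(0.2) == Fraction(1, 5)

# Uniqueness in binary: a normal number is written with leading digit 1,
# which leaves only one choice of exponent.
binary_form = (0.2).hex()

# Decimal formats can hold several representations of the same value
# (leading zero digits are allowed, so the exponent is not forced).
one_a = Decimal("1.0")   # coefficient 10, exponent -1
one_b = Decimal("1.00")  # coefficient 100, exponent -2
same_value = one_a == one_b
same_representation = one_a.as_tuple() == one_b.as_tuple()
```

Here `stored_is_exact` is false, which is the objection raised in the original post, while `same_value` is true and `same_representation` is false, which is the normalization issue the article sentence is actually about.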

Exception handling

"Exception handling", What about "Denormal" exception? —Preceding unsigned comment added by 149.156.67.102 (talk) 15:06, 15 December 2008 (UTC)

- There is no 'denormal exception' in IEEE 754. The five possible exceptions are:

- Invalid operation

- Division by zero

- Overflow

- Underflow

- Inexact

- Underflow occurs when a non-zero result is both subnormal and inexact. mfc (talk) 16:55, 15 December 2008 (UTC)

- 1985 version states underflow is when subnormal and inexact, but the 2008 version doesn't seem to require inexact? — Preceding unsigned comment added by 77.99.44.113 (talk) 14:53, 16 April 2018 (UTC)

Still about "Exception handling", a note concerning the "Division by zero". The 1985 definition was: "If the divisor is zero and the dividend is a finite nonzero number, then the division by zero exception shall be signaled." As new operations were added in the IEEE 754 revision, it has been chosen to change this definition to include other operations with similar behavior (e.g. log(0)). The new definition is: "The divideByZero exception shall be signaled if and only if an exact infinite result is defined for an operation on finite operands." Vincent Lefèvre (talk) 20:28, 12 February 2012 (UTC)

- Why is it worth sticking that in? It doesn't describe the conditions for all the other exceptions never mind in such detail. There's tons more worthwhile stuff like the IEEE 754 1985 article has, it might be worth moving much of the common stuff there about operations to a common article. Dmcq (talk) 21:02, 12 February 2012 (UTC)

- The page was giving explanations for the invalid, overflow and underflow exceptions. For consistency, it should also give an explanation for division by zero, in particular because the name of this exception can be misleading. Saying that the exceptions were the same as in the old standard was also misleading. I agree that there should be a common article. The changes could be mentioned on IEEE_754_revision. Vincent Lefèvre (talk) 23:36, 12 February 2012 (UTC)

- On the business of log(0) it does occur to me that it is worth noting that the exceptions have been extended to functions in general as seems appropriate. I see the standard now says that functions should set inexact correctly so it really is going for doing it all exactly right. Dmcq (talk) 20:16, 22 February 2012 (UTC)

Underflow

The article as of 2018-11-21 states

- Underflow: a result is very small (outside the normal range) and is inexact. Returns a **subnormal or zero** by default.

This is unclear: does it mean that zero is the default? or is the default subnormal or zero depending on other conditions? under what conditions does it return one or the other? I would also prefer “too small to represent” to “very small (outside the normal range)” PJTraill (talk) 21:40, 21 November 2018 (UTC)

- I've clarified the text. But note that "too small to represent" is more ambiguous than "outside the normal range". Vincent Lefèvre (talk) 22:07, 21 November 2018 (UTC)
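To make the "subnormal or zero" wording concrete, here is a minimal sketch that classifies a binary64 multiplication using the "tiny and inexact" description quoted above. The function name `multiply_with_underflow_check` is hypothetical. Tininess is tested on the exact product (before rounding), which is only one of the detection choices an implementation may make. The sketch assumes finite inputs, no overflow, and default round-to-nearest with gradual underflow.

```python
import sys
from fractions import Fraction

MIN_NORMAL = Fraction(sys.float_info.min)  # smallest normal binary64, 2**-1022


def multiply_with_underflow_check(x: float, y: float):
    """Return (rounded product, whether underflow would be flagged)."""
    exact = Fraction(x) * Fraction(y)  # exact rational product
    result = x * y                     # correctly rounded by the hardware
    tiny = exact != 0 and abs(exact) < MIN_NORMAL
    inexact = Fraction(result) != exact
    return result, tiny and inexact
```

With these assumptions, `2.0**-1000 * 2.0**-60` gives an exact subnormal and no underflow, `(1.0 + 2.0**-52) * 2.0**-1030` gives a rounded subnormal with underflow, and `2.0**-1000 * 2.0**-1000` gives zero with underflow. Which of "subnormal" or "zero" is returned therefore depends only on where the rounded result lands.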

As far as I know, some implementations do denormal (or subnormal) in software emulation. The hardware detects the problem, generates the interrupt, and software generates the appropriate value. It was years ago that I knew that, so maybe none does by now. It was especially interesting in some parallel implementations, where everything stopped for one interrupt. It would be interesting to know, and add to the article, if some implementations do that. Gah4 (talk) 20:02, 18 October 2022 (UTC)

722 digits

There is some discussion in comp.lang.fortran that IEEE 754 requires conversion of textual representations with 722 digits to the exactly rounded value. Specifically, the value that is half way between the lowest, and next to lowest, subnormal. Reading this article, it only requires converting internal binary form to printable decimal, and then back to exactly the same internal binary value. Much less than 722 digits. If the standard does require 722 digits, then this article should mention it. Gah4 (talk) 02:30, 18 October 2022 (UTC)

- There is no such requirement. — Vincent Lefèvre (talk) 11:47, 18 October 2022 (UTC)

- @Gah4: Here's what the standard says: "There might be an implementation-defined limit on the number of significant digits that can be converted with correct rounding to and from supported binary formats. That limit, H, shall be such that H ≥ M + 3 and it should be that H is unbounded." The value of M depends on the largest supported binary format: it is 17 for binary64, 36 for binary128, and larger if larger binary formats are supported. So there is nothing about 722 digits. There is a "should" for any number of digits. Perhaps the "722 digits" corresponds to the most difficult case for correct rounding to binary64 (double precision), but I would say that's rather something like 752 digits. — Vincent Lefèvre (talk) 22:35, 18 October 2022 (UTC)

- The specific case is between the smallest, and next to smallest, subnormal. I don't know if that is worst case. Or maybe I counted wrong. The M+3 makes sense to me. Well, I remember back to days before anyone thought about this. Gah4 (talk) 01:33, 19 October 2022 (UTC)

- @Gah4: This is probably the worst case, because each time you divide by 2, you may get an additional decimal digit, in particular for the negative powers of 2 (the last decimal digit being always 5): 0.5, 0.25, 0.125, 0.0625, etc. A significant digit sometimes disappears on the left, but less often. — Vincent Lefèvre (talk) 13:39, 19 October 2022 (UTC)

- It seems that the actual answer is that some systems used the Windows library routines, instead of the ones that might have gone with the compiler. But the reason I ask here was, more generally, what is reasonable, and what does the standard require. Also, the 722 is significant digits, so not counting all the zeros between the decimal point and the first non-zero digit. It should be about 0.3 decimal digits per bit, so about 0.7 significant digits per bit. It seems that some routines give the smallest normalized value for this case. Gah4 (talk) 21:50, 19 October 2022 (UTC)

- @Gah4: More precisely, for 2^(−n), this is n·log(5)/log(10) digits (your "per bit" is rather meaningless). — Vincent Lefèvre (talk) 23:06, 19 October 2022 (UTC)

- It is, but the question is the two smallest subnormal values, which are both 2**(-n) values. Why it is those values, in the original comp.lang.fortran post, was not stated. It isn't completely obvious that input routines should allow for subnormal values, and when they do, to so many digits. Gah4 (talk) 08:20, 5 November 2022 (UTC)
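The digit counts in this thread can be checked with exact integer arithmetic. The sketch below assumes binary64 and uses hypothetical helper names. It relies on the identity k/2^n = k·5^n/10^n, so for odd k the number of significant decimal digits is the length of k·5^n. The formula in `min_round_trip_digits` is an assumption about how the quoted values of M arise; it reproduces the 17 and 36 mentioned above.

```python
import math


def min_round_trip_digits(p: int) -> int:
    """Digits needed to round-trip a binary format with precision p bits."""
    return 1 + math.ceil(p * math.log10(2))


def significant_digits(k: int, n: int) -> int:
    """Significant decimal digits of k / 2**n, for odd k and n >= 1."""
    # k * 5**n is odd and ends in 5, so it has no trailing zeros.
    return len(str(k * 5**n))


def midpoint_literal() -> str:
    """Exact decimal text of the midpoint between 2**-1074 and 2**-1073."""
    # The midpoint is 3 / 2**1075, i.e. 3 * 5**1075 scaled by 10**-1075.
    return "0." + str(3 * 5**1075).zfill(1075)


smallest_subnormal_digits = significant_digits(1, 1074)
midpoint_digits = significant_digits(3, 1075)
parsed = float(midpoint_literal())  # result depends on the library's rounding
```

Under these assumptions the smallest subnormal needs 751 significant digits and the midpoint needs 752, which matches the "something like 752 digits" estimate rather than 722. Whether `parsed` is the correctly rounded neighbour depends on the conversion routine in use, which is the library-quality question raised at the start of this thread.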
